Hi Everyone,

I am trying to fit a model to a dataset that has a lot of BLQ values. It is a two-compartment PK model with parallel linear and Michaelis-Menten elimination. When I set the BLQ values to LLOQ/2 and use the FOCE-ELS engine, I get a nice fit.

However, to avoid the bias introduced by LLOQ/2 imputation, I am now using the BQL option with the Laplacian engine:

```
observe(CObs = C1*(1 + CEps), bql)
```

I then map the BQL flag column to CObsBQL, with BQL records set to 1 and all other records set to 0.
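For readers setting up the same mapping, here is a minimal Python sketch of deriving the 0/1 flag column. It assumes (hypothetically) that each record is a dict with `ID`, `TIME`, `CObs` (the measured value, or `None` when the assay reported below LLOQ) and an `LLOQ` field, and that censored records should carry the LLOQ in the observation column. Check the Phoenix documentation for how your version interprets the observation value on flagged rows.

```python
"""Sketch: derive a 0/1 BQL flag column for a censored-observation fit.

Assumptions (hypothetical, for illustration only):
  - each record is a dict with 'ID', 'TIME', 'CObs' (measured value, or None
    when the assay reported <LLOQ) and 'LLOQ';
  - censored records carry the LLOQ in CObs and are flagged with CObsBQL = 1.
"""


def add_bql_flag(records):
    """Return new records with a CObsBQL flag (1 = below LLOQ, 0 = quantified)."""
    out = []
    for rec in records:
        new = dict(rec)  # copy so the input records are not modified
        value, lloq = rec["CObs"], rec["LLOQ"]
        if value is None or value < lloq:
            new["CObs"] = lloq  # censored: report the LLOQ itself
            new["CObsBQL"] = 1
        else:
            new["CObsBQL"] = 0  # quantified: keep the measured value
        out.append(new)
    return out


data = [
    {"ID": 1, "TIME": 1.0, "CObs": 12.3, "LLOQ": 0.5},
    {"ID": 1, "TIME": 24.0, "CObs": None, "LLOQ": 0.5},
    {"ID": 1, "TIME": 48.0, "CObs": 0.2, "LLOQ": 0.5},
]
for row in add_bql_flag(data):
    print(row["TIME"], row["CObs"], row["CObsBQL"])
```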

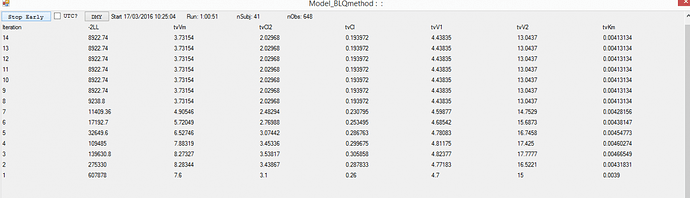

The model seems to converge at first, but then it stalls for many iterations, repeating the same -2LL and the same fixed-effect estimates (see the attached screenshot).

Do you have a suggestion?

Best Regards and Many Thanks,

Joao

Joao,

I don’t know if this is the specific problem, as there could be many challenges to overcome. But we can narrow in on the problem with a few steps. First, the engine sometimes gets stuck during minimization (less common), or it gets stuck when calculating the standard errors (i.e., the covariance step). Did you already try running the model with Std. Err set to “None”? This will allow the engine to stop once minimization has completed.

If this works, then the problem lies in calculating the standard errors of the parameter estimates. This may be due to model instability or model complexity. You can also obtain standard error estimates by running a bootstrap on the model to get distributions of the parameter estimates.
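The idea behind a bootstrap standard error is to resample whole subjects with replacement, re-estimate on each resampled dataset, and take the spread of the estimates. A minimal Python sketch of that resampling logic follows; the `estimate` callable is a hypothetical placeholder standing in for a full model refit (here just the mean of a per-subject value), and it does not use any Phoenix API.

```python
import random
import statistics


def bootstrap_se(subjects, estimate, n_boot=500, seed=1):
    """Nonparametric bootstrap over subjects.

    subjects: dict mapping subject ID -> that subject's data.
    estimate: callable taking a list of subject data and returning a scalar
              (hypothetical stand-in for refitting the NLME model).
    Returns the standard deviation of the bootstrap estimates.
    """
    rng = random.Random(seed)  # fixed seed for reproducibility
    ids = list(subjects)
    estimates = []
    for _ in range(n_boot):
        # Resample subjects (not individual observations) with replacement.
        sample_ids = [rng.choice(ids) for _ in ids]
        estimates.append(estimate([subjects[i] for i in sample_ids]))
    return statistics.stdev(estimates)


# Toy example: per-subject clearance values, estimator = mean.
cl = {1: 4.8, 2: 5.3, 3: 6.1, 4: 4.2, 5: 5.9, 6: 5.0, 7: 4.5, 8: 6.4}
print(round(bootstrap_se(cl, statistics.mean), 3))
```

Resampling by subject rather than by observation keeps each subject's correlated observations together, which is the usual choice for population data.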

If the engine still does not finish with Std. Err set to “None”, you may want to check for data problems. Does any subject have a data error that could be causing a problem in the model? Are all the doses correct? Are there any concentrations that are wildly different from the others? Is the timing accurate (doses before observations)? Sometimes a data inconsistency can make the model unstable.
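Some of these checks are easy to automate before fitting. Below is a minimal Python sketch, assuming records are dicts with hypothetical `ID`, `TIME`, `AMT` (dose, or `None`) and `CObs` (concentration, or `None`) fields, and that you know the set of planned dose amounts. It flags subjects with no dose, observations before the first dose (which may be legitimate pre-dose samples, so review rather than delete), unexpected dose amounts, and unsorted times.

```python
def check_dosing_records(records, expected_doses):
    """Return a list of human-readable problems found per subject.

    Field names (ID, TIME, AMT, CObs) are hypothetical; adapt to your dataset.
    """
    problems = []
    by_id = {}
    for rec in records:
        by_id.setdefault(rec["ID"], []).append(rec)
    for sid, recs in sorted(by_id.items()):
        dose_times = [r["TIME"] for r in recs if r.get("AMT") is not None]
        obs_times = [r["TIME"] for r in recs if r.get("CObs") is not None]
        if not dose_times:
            problems.append(f"ID {sid}: no dose records")
        elif obs_times and min(obs_times) < min(dose_times):
            # Could be a genuine pre-dose sample; flag it for review.
            problems.append(f"ID {sid}: observation at t={min(obs_times)} before first dose")
        for r in recs:
            amt = r.get("AMT")
            if amt is not None and amt not in expected_doses:
                problems.append(f"ID {sid}: unexpected dose {amt} at t={r['TIME']}")
        times = [r["TIME"] for r in recs]
        if times != sorted(times):
            problems.append(f"ID {sid}: records not sorted by time")
    return problems


recs = [
    {"ID": 1, "TIME": 0.0, "AMT": 100.0, "CObs": None},
    {"ID": 1, "TIME": 1.0, "AMT": None, "CObs": 5.0},
    {"ID": 2, "TIME": 0.5, "AMT": None, "CObs": 3.0},
    {"ID": 2, "TIME": 1.0, "AMT": 1000.0, "CObs": None},
]
for p in check_dosing_records(recs, {100.0}):
    print(p)
```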

I hope that helps you a bit in troubleshooting.

-Nathan

Hi Nathan,

Thank you for the advice. I will try that out, and let you know how it works.

Joao

Hi Nathan,

I tried running the model with the Laplacian engine and Std. Err set to “None”, and it still stays indefinitely at the last iteration. If I click “Stop Early” and inspect the results, the fit looks quite good. The etas are also well distributed, so I don’t understand why the engine keeps running.

I am using the Laplacian Engine because I have BLQ values. When using FOCE-ELS and setting BLQ=LLOQ/2, everything works fine. I have checked my data and I don’t think I have a data inconsistency.

Do you think the QRPEM or IT2S-EM methods would have an advantage over the Laplacian? What would be the equivalent of the NONMEM M3 method?
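For context on the M3 question: in M3-style censoring, a BLQ record contributes the probability that the observation lies below the LLOQ (a normal CDF) instead of a density evaluated at an imputed value such as LLOQ/2. My understanding is that the `bql` option in the `observe` statement plays this role, but please confirm against the Phoenix documentation. Below is a minimal Python sketch of the conditional -2LL for one subject, assuming the proportional error model from the `observe` statement above and that flagged records hold the LLOQ as their observed value. It does not integrate over the random effects, which is the engine's job.

```python
import math


def norm_logpdf(x, mu, sd):
    """Log density of N(mu, sd^2) at x."""
    z = (x - mu) / sd
    return -0.5 * z * z - math.log(sd * math.sqrt(2.0 * math.pi))


def norm_logcdf(x, mu, sd):
    """Log of P(X <= x) for X ~ N(mu, sd^2); adequate for moderate z."""
    z = (x - mu) / sd
    return math.log(0.5 * math.erfc(-z / math.sqrt(2.0)))


def m3_minus2ll(observations, predictions, bql_flags, sigma_prop):
    """Conditional -2LL with M3-style censoring and proportional error.

    Assumes CObs = C*(1 + eps), eps ~ N(0, sigma_prop^2), so SD = sigma_prop*C.
    Predictions must be > 0. For flagged records the observation is the LLOQ.
    """
    total = 0.0
    for y, c, flag in zip(observations, predictions, bql_flags):
        sd = sigma_prop * c
        if flag:
            total += norm_logcdf(y, c, sd)  # P(CObs < LLOQ)
        else:
            total += norm_logpdf(y, c, sd)  # density of the measured value
    return -2.0 * total


obs = [12.0, 4.1, 0.5]   # last record is BLQ, so it holds the LLOQ
pred = [11.5, 4.4, 0.6]
flags = [0, 0, 1]
print(round(m3_minus2ll(obs, pred, flags, 0.15), 4))
```

By contrast, LLOQ/2 imputation would score the last record with `norm_logpdf(0.25, 0.6, 0.15 * 0.6)` as if 0.25 had been measured, which is where the bias comes from.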

Thanks a lot.

Best,

Joao

Joao,

If the results you get when you stop the model early are “good”, then the convergence criteria may not be met because a small drift in the -2LL prevents convergence. This could be due to over-parameterization of the model or overly stringent convergence criteria. Is there any way you can sanitize the data and post the project for us to troubleshoot further? Alternatively, you can go through our support area, where you can send confidential information for troubleshooting.
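To illustrate how a tolerance interacts with a small drift, here is a minimal Python sketch of a generic relative-change stopping rule on the -2LL history. This is not Phoenix's actual convergence criterion; the rule, `rel_tol` and `window` are hypothetical. The same history passes a loose tolerance but never passes a very tight one, so the optimizer keeps iterating.

```python
def has_converged(history, rel_tol=1e-6, window=3):
    """True if the last `window` relative changes in -2LL are all below rel_tol.

    Generic illustrative rule (hypothetical), not any engine's exact criterion.
    """
    if len(history) < window + 1:
        return False
    recent = history[-(window + 1):]
    for prev, cur in zip(recent, recent[1:]):
        # Relative change, with a floor of 1.0 on the denominator near zero.
        if abs(cur - prev) / max(abs(prev), 1.0) >= rel_tol:
            return False
    return True


# -2LL oscillating by ~0.001 around -1523.2 (a small drift).
hist = [-1520.4, -1523.1, -1523.2, -1523.2005, -1523.1995, -1523.2005]
print(has_converged(hist, rel_tol=1e-6))  # loose tolerance
print(has_converged(hist, rel_tol=1e-8))  # stringent tolerance
```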

Nathan

Hello Nathan,

I checked my data thoroughly and found a problem. I am dealing with “interim” data and, as you expected, a couple of doses were not correct. Just a few, but it was enough to prevent the model from converging. Everything seems to be working fine now.

Again, many thanks.

Best,

Joao

I’m so glad you found the problem. Data issues can be very difficult to identify, and in complex models they can produce unexpected results. But now that you have identified the issue and the resolution, you will know what to look for in future analyses!

It is still interesting to note that the FOCE engines converged even with the data problem, whereas the Laplacian engine only converged after I fixed the small problems in my dataset.